News

AI Robots and Website Content: A Strategic Guide

September 2, 2025

News

September 2, 2025

As artificial intelligence continues to reshape the digital landscape, website publishers face critical decisions about whether to allow AI robots to harvest their content. This document explores the complex considerations around AI web crawling, examining both the opportunities and challenges it presents for businesses and content creators.

AI robots, also known as web crawlers or bots, are automated programs that systematically browse and index web content. Unlike traditional search engine crawlers that are primarily focused on discovery and ranking, AI crawlers collect data to train large language models (LLMs) and power generative AI applications.

Enhanced Discoverability and Reach: Allowing AI robots to access your content can significantly expand your reach beyond traditional search engines. When AI models are trained on your content, they can reference and recommend your information to users across various AI-powered platforms and applications.

Thought Leadership and Authority Building: Content that becomes part of AI training data can establish your organization as an authoritative source in your field. AI systems may cite or reference your expertise when responding to related queries, positioning your brand as a trusted knowledge source.

Future-Proofing Your Digital Strategy: As AI-powered search and discovery mechanisms become more prevalent, early adoption of AI-friendly practices may provide competitive advantages. Organizations that embrace AI integration today may be better positioned for tomorrow's digital landscape.

Indirect SEO Benefits: While traditional SEO focuses on search engines, AI-accessible content can drive traffic through AI-powered recommendations, chatbots, and virtual assistants that reference your information.

Intellectual Property and Copyright Issues: AI training on your content raises complex questions about intellectual property rights. Your proprietary information, research, or creative work may be incorporated into AI models without direct attribution or compensation.

Content Monetization Challenges: If AI systems can provide answers derived from your content without directing users to your website, you may lose potential ad revenue, lead generation opportunities, and direct user engagement.

Competitive Intelligence Risks: Allowing broad AI access may inadvertently provide competitors with insights into your strategies, methodologies, or proprietary approaches through AI-generated responses.

Brand Control and Misrepresentation: AI systems may misinterpret, miscontextualize, or inaccurately represent your content, potentially damaging your brand reputation without your knowledge or control.

Resource Consumption: AI crawlers can consume significant server resources and bandwidth, potentially impacting site performance and increasing hosting costs.

In a recent Editor & Publisher interview about the launch of the Fideri News Network, leaders discussed how strategic digital integration and content accessibility can redefine regional news coverage. This same philosophy—balancing innovation with control—is central to how publishers can approach AI content access and automation today.

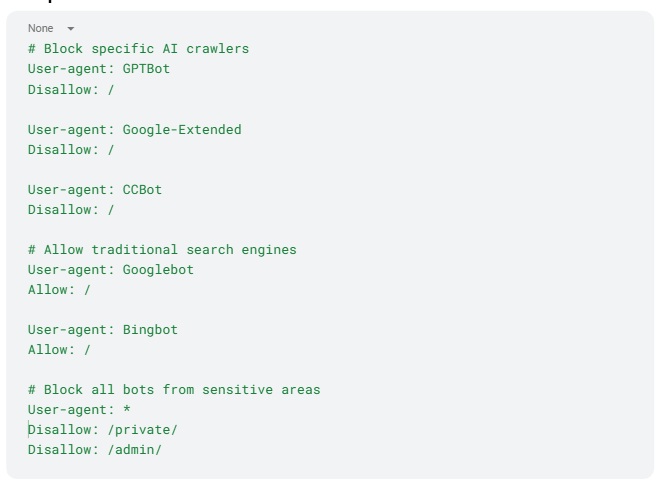

The robots.txt file serves as your website's first line of defense in controlling automated access. Located at your domain's root (e.g., yoursite.com/robots.txt), this file communicates crawling permissions to bots.

Keep in mind the following: a robots.txt file can help limit crawling by well-behaved web crawlers, but it has important limitations, especially regarding AI training:

Think of robots.txt as a first line of defense rather than a complete solution - it's helpful but not foolproof for preventing AI companies from accessing your content.

At ePublishing, we have additional ways to combat AI bots at the front end by utilizing Cloudflare features.

The LLMS.txt file represents an emerging standard for websites to communicate specifically with AI systems. Similar to robots.txt but designed for the AI era, this file can provide more nuanced instructions for language models.

LLMS.txt is a markdown file that websites can add to help Large Language Models (LLMs) better understand and use their content. Think of it as a "guidebook" for AI systems visiting your website.

Large language models face a critical limitation: context windows are too small to handle most websites in their entirety. Converting complex HTML pages with navigation, ads, and JavaScript into LLM-friendly plain text is both difficult and imprecise.

Imagine trying to read a book while someone keeps showing you advertisements and navigation menus between every sentence - that's what websites look like to AI systems.

The file sits at the root of your website (e.g., yoursite.com/llms.txt) and acts as a table of contents or summary for AI systems. It offers brief background information, guidance, and links to detailed markdown files. ePublishing can place an LLMS.txt file on your website if you wish. The best way to generate this is to use AI to generate this for you. A simple to use experience is Claude.ai, using this AI point the prompt at your web site and ask for Claude to create a LLMS.txt file for you. Review the file. Is this the information that you would like to be known for? Send this file to us and we will get it placed where search bots are looking for it.

The format is straightforward:

The "Optional" section has special meaning—URLs provided there can be skipped if a shorter context is needed. Use it for secondary information which can often be skipped.

LLMS.txt represents an early effort to create transparency and control over AI content usage, emerging as a technical tool to help AI better crawl and understand websites. It's gaining traction but isn't yet a universal standard like robots.txt.

Think of LLMS.txt as creating a "Cliff Notes" version of your website, specifically for AI systems, giving them the most important information in a clean, organized format they can easily understand and use to help people.

As AI-powered search and discovery tools become mainstream, traditional SEO is evolving into Generative Engine Optimization (GEO). This new discipline focuses on optimizing content for AI-generated responses rather than just search engine rankings.

Structured Information Architecture: Organize content in clear, hierarchical structures that AI systems can easily parse and understand. Use semantic markup and structured data to provide context. Continuum’s Taxonomy helps to guide this.

Authoritative Source Signals: Establish clear expertise, authoritativeness, and trustworthiness (E-A-T) signals that AI systems can recognize and value when determining source credibility. Make sure all images and content are properly attributed to your site. Bylines, Image Credits, and Image Alt Text are all supported in Continuum.

Comprehensive Topic Coverage: Create in-depth, comprehensive content that addresses topics holistically rather than focusing solely on keyword optimization. Focus on your leads and teasers, making sure that these areas describe the content.

Factual Accuracy and Citations: Ensure content accuracy and provide proper citations, as AI systems increasingly value verifiable information from credible sources. When quoting people, make sure you provide their roles and titles.

Develop an AI Content Policy: Create clear guidelines for how your organization will interact with AI crawlers, including which content to share and which to protect.

Implement Selective Access: Use robots.txt and emerging tools like LLMS.txt to allow beneficial AI access while protecting sensitive or proprietary information.

Focus on Quality and Authority: Invest in creating high-quality, authoritative content that provides value whether accessed by humans or AI systems.

Monitor AI References: Regularly search for how AI systems reference your content and brand to ensure accurate representation and identify opportunities.

Prepare for Attribution Changes: Develop strategies for brand recognition and traffic generation that don't rely solely on direct website visits.

Stay Informed: Keep abreast of evolving AI crawling standards, legal developments, and industry best practices as this landscape continues to develop.

Implement a Content Access Strategy: Continuum builds access controls directly into your website architecture. These integrated barriers ensure that AI crawlers can only access the content you want to make publicly available. While AI systems may still reference your content in their responses, accessing the full source material or diving deeper into your articles would require user authentication and login credentials.

This approach gives you greater control over how much of your content becomes part of AI training datasets while still allowing for some visibility and attribution when your content is referenced.

We are here to help empower, educate, and support the business decisions you make for your websites.

The decision to allow or restrict AI robot access to your website content requires careful consideration of your business goals, content strategy, and risk tolerance. While there are valid concerns about intellectual property and content monetization, there are also significant opportunities for increased reach and authority building.

The most successful approach likely involves selective permission rather than blanket acceptance or rejection. By thoughtfully implementing robots.txt restrictions, exploring LLMS.txt possibilities, and adapting to generative engine optimization principles, organizations can navigate this new landscape while protecting their interests and maximizing opportunities.

As AI continues to evolve, so too will the tools and strategies for managing AI-website interactions. The key is to remain flexible, informed, and strategic in your approach while staying true to your organization's core values and business objectives.